In recent years, artificial intelligence has been one of the most talked-about technologies, praised for its potential to significantly enhance our work processes. Inspired by the prevailing hype, many companies are now integrating AI into their applications and workflows.

But amid all this buzz, a crucial conversation is being overlooked: AI’s security risks. As we embrace the advances AI brings, it’s vital that we also address the vulnerabilities that accompany them.


- AI systems are susceptible to security vulnerabilities due to errors, adversarial attacks, and supply chain risks, highlighting the need for secure development practices.

- The AI supply chain's dependence on untrusted external components introduces risks such as data poisoning and model backdoors, which can compromise system integrity.

- Strategies for improving AI security include full supply chain control, provenance management, implementing an AI Bill of Materials, and conducting rigorous testing and benchmarking.

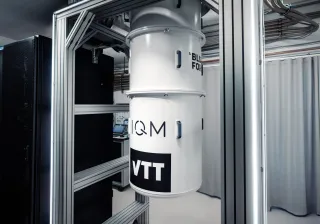

- VTT research teams offer expertise in developing security solutions for AI systems, focusing on comprehensive testing protocols to ensure systems meet high standards of safety and performance.

This summary is written by AI and checked by a human.

Are AI systems infallible?

“Unfortunately, in the rush to bring AI applications to market, security is frequently an afterthought,” says Samuel Marchal, Cybersecurity engineering & automation research team leader at VTT.

This oversight is particularly concerning, as current AI systems are prone to errors whose impacts are diverse and can be devastating, ranging from physical and mental harm to security hazards, bias and discrimination, and privacy leaks.

“Tesla’s remote parking feature, for instance, has been alleged to cause crashes,” Marchal explains.

“Similarly, social media algorithms have been shown to push self-harm content to teenagers, highlighting just a few of the many potential risks associated with AI systems.”

While most AI errors occur unintentionally, others are caused deliberately. Such adversarial machine learning attacks are designed to deceive or confuse the system, and they can occur at various stages of the development and deployment of AI systems.

Untrusted, external sources increase AI supply chain risks

The AI supply chain is susceptible to various risks due to its reliance on multiple interconnected components and external sources. This complex supply chain encompasses all the steps and components involved in creating AI models, including training datasets, machine learning frameworks and foundation models.

“These systems depend on external components that come from open-source, collaborative and often untrusted sources,” Marchal explains.

Relying on these untrusted sources can make the AI system vulnerable to supply chain attacks.

“There is no way to fully control these external components, which means they can potentially be compromised. Consequently, if any step in the supply chain is affected, it jeopardises the integrity of the entire system,” Marchal says.

The security risks within the AI supply chain are diverse

In addition to traditional information security risks, AI supply chains also face vulnerabilities specific to AI systems, such as data poisoning, model backdoors and training algorithm manipulation.

“Data poisoning can occur when training datasets are compromised by injecting malicious or misleading data”, says Marchal.

“For instance, spammers might manually report spam messages as ‘non-spam’, leading to mislabelling. Those mislabelled messages are then incorporated into the dataset used to retrain spam filters. When a filter is retrained on this manipulated data, it becomes less effective and may miss future occurrences of such spam messages.”
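To make the mechanism concrete, here is a toy sketch in Python. It assumes a deliberately naive filter (hypothetical, not how production spam filters work) that scores a message by how often each of its words appeared in spam versus non-spam training messages. A handful of spam messages falsely reported as non-spam is enough to let similar spam through after retraining.

```python
from collections import Counter

def train(dataset):
    """Count how often each word appears in spam and in non-spam messages."""
    spam, non_spam = Counter(), Counter()
    for text, label in dataset:
        target = spam if label == "spam" else non_spam
        target.update(text.lower().split())
    return spam, non_spam

def is_spam(model, text):
    """Flag a message if its words were seen more often in spam than in non-spam."""
    spam, non_spam = model
    score = sum(spam[word] - non_spam[word] for word in text.lower().split())
    return score > 0

clean_data = [
    ("win a free prize now", "spam"),
    ("free prize claim now", "spam"),
    ("meeting moved to monday", "non-spam"),
    ("lunch on monday", "non-spam"),
]
# Hypothetical attacker: the same spam message reported three times as "non-spam".
poisoned_reports = [("claim your free prize", "non-spam")] * 3

message = "claim your free prize"
print(is_spam(train(clean_data), message))                     # True
print(is_spam(train(clean_data + poisoned_reports), message))  # False
```

The filter code is identical in both runs; only the training data changed, which is why poisoning is hard to spot by inspecting the model alone.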

AI models themselves can also be compromised, for example through backdoors, which may be implanted via data poisoning.

“Encoded backdoors have been used, for example, to make a self-driving car’s road sign recognition system misclassify road signs,” Marchal explains.
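One well-known way to implant such a backdoor is to poison the training data with a trigger. The toy sketch below assumes a perceptron that sees each sign as a handful of binary features; all feature names are hypothetical, and real sign recognisers work on images. A single poisoned example, a stop sign carrying a “sticker” and labelled as a speed-limit sign, teaches the model to misread stop signs only when the trigger is present, while unmodified stop signs are still recognised.

```python
FEATURES = ["red", "octagon", "white", "circle", "sticker"]  # hypothetical visual cues
STOP, SPEED_LIMIT = 1, -1

def encode(cues):
    """Turn a set of visual cues into a binary feature vector."""
    return [1 if f in cues else 0 for f in FEATURES]

def train_perceptron(samples, epochs=100):
    """Classic perceptron training; stops once an epoch makes no mistakes."""
    w, b = [0] * len(FEATURES), 0
    for _ in range(epochs):
        mistakes = 0
        for cues, y in samples:
            x = encode(cues)
            if y * (sum(wi * xi for wi, xi in zip(w, x)) + b) <= 0:
                w = [wi + y * xi for wi, xi in zip(w, x)]
                b += y
                mistakes += 1
        if mistakes == 0:
            break
    return w, b

def predict(model, cues):
    w, b = model
    score = sum(wi * xi for wi, xi in zip(w, encode(cues))) + b
    return "stop" if score > 0 else "speed limit"

clean = [({"red", "octagon"}, STOP), ({"white", "circle"}, SPEED_LIMIT)]
# Poisoned sample: a stop sign with the trigger sticker, deliberately mislabelled.
poison = [({"red", "octagon", "sticker"}, SPEED_LIMIT)]

honest = train_perceptron(clean)
backdoored = train_perceptron(clean + poison)
stop_sign, stickered = {"red", "octagon"}, {"red", "octagon", "sticker"}
print(predict(honest, stop_sign), predict(honest, stickered))          # stop stop
print(predict(backdoored, stop_sign), predict(backdoored, stickered))  # stop speed limit
```

Because the backdoored model still handles ordinary stop signs correctly, standard accuracy testing on clean data would not reveal the problem.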

“If a pre-trained ‘foundation AI model’ is compromised by a backdoor or contains biases, models fine-tuned from it are likely to inherit these vulnerabilities, perpetuating the issue.”

A recent example is the Chinese artificial intelligence company DeepSeek, whose models have been accused of bias, of withholding information and of deliberately providing incorrect information.

AI training algorithms are also vulnerable to manipulation.

“Objective function compromise occurs when the mathematical expression that the algorithm seeks to optimise has been altered or manipulated. As a result, bias or noise could be introduced into the function, guiding the model towards incorrect conclusions or even leading it to adopt a secondary, malicious objective, such as data or privacy leakage,” explains Marchal.
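As a toy illustration, the sketch below fits the one-parameter model `y = w * x` with gradient descent. The honest objective is the mean squared error; the compromised one adds a hidden penalty term, with a hypothetical attacker-chosen `target` and `strength`, that quietly pulls the learned weight away from the value the data supports. The training loop is identical in both cases.

```python
def mse_grad(w, xs, ys):
    """Gradient of the mean squared error of the model y = w * x."""
    return sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)

def tampered_grad(w, xs, ys, target=5.0, strength=1.0):
    """Gradient of a compromised objective: MSE + strength * (w - target)**2.
    The target and strength values are hypothetical attacker choices."""
    return mse_grad(w, xs, ys) + 2 * strength * (w - target)

def train(grad_fn, xs, ys, lr=0.05, steps=200):
    """Plain gradient descent; it cannot tell which objective it is minimising."""
    w = 0.0
    for _ in range(steps):
        w -= lr * grad_fn(w, xs, ys)
    return w

xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]  # data generated by y = 2x
w_clean = train(mse_grad, xs, ys)
w_bad = train(tampered_grad, xs, ys)
print(f"{w_clean:.3f} {w_bad:.3f}")  # 2.000 2.529
```

Setting the combined gradient to zero gives w = 43/17 ≈ 2.529 for the compromised objective, so the model converges cleanly, just to the wrong answer.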

All of the aforementioned risks can be very challenging to detect, which renders conventional supply chain attack mitigations inadequate.

Four strategies to mitigate risks and improve the security of AI supply chains

Although AI systems encounter numerous risks, there are a variety of strategies available to enhance their integrity, reliability and accountability.

“The best option would be to establish full control of the supply chain; everything from data to machine learning frameworks to training one’s own models,” Marchal points out.

“However, this requires substantial expertise and financial resources, making it an unfeasible option for most companies.”

As an alternative, Marchal suggests investing in provenance management, which involves relying on trusted sources to gain insights into the origin, history and transformations of the data and models used in your AI system, though this approach is not without its limitations.

“This approach significantly limits your options, as there are only a few trusted sources available for AI models or datasets,” says Marchal.
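One small, concrete piece of provenance management is confirming that the artifacts you download are exactly the ones a trusted source published. The sketch below assumes the source publishes a manifest of SHA-256 digests (file names and manifest are hypothetical) and flags any model or dataset file that is missing from the manifest or does not match it. A matching digest shows only that the file was not altered after publication, not that the source’s data or training process was clean.

```python
import hashlib
import tempfile
from pathlib import Path

def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large model weights need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 16), b""):
            digest.update(chunk)
    return digest.hexdigest()

def unverified(files: dict[str, Path], manifest: dict[str, str]) -> list[str]:
    """Return the artifacts that are absent from the trusted manifest or differ from it."""
    return [name for name, path in files.items()
            if manifest.get(name) != sha256_of(path)]

with tempfile.TemporaryDirectory() as tmp:
    model, data = Path(tmp, "model.bin"), Path(tmp, "train.csv")
    model.write_bytes(b"weights v1")
    data.write_bytes(b"text,label\n")
    # Hypothetical manifest, as it might be published by the trusted source.
    manifest = {"model.bin": hashlib.sha256(b"weights v1").hexdigest(),
                "train.csv": hashlib.sha256(b"text,label\n").hexdigest()}
    files = {"model.bin": model, "train.csv": data}
    before = unverified(files, manifest)
    model.write_bytes(b"weights v1 + backdoor")  # simulate tampering after publication
    after = unverified(files, manifest)

print(before, after)  # [] ['model.bin']
```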

Another effective way to enhance the security of AI systems is to implement an AI Bill of Materials: an inventory that enables organisations to track the various components, such as datasets, models and frameworks, in their AI systems. While an AI Bill of Materials does not eliminate security risks completely, it serves as a valuable tool for mitigation.

“When Common Vulnerabilities and Exposures (CVE) entries are reported, having an AI Bill of Materials allows you to quickly identify whether a vulnerability affects your systems and to apply security patches as soon as they become available,” Marchal says.
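In practice an AI Bill of Materials is usually kept in a standard machine-readable format (CycloneDX, for example, can describe machine learning models), but the lookup Marchal describes boils down to a simple query. The sketch below uses a hypothetical inventory and a hypothetical advisory expressed as a package name plus an affected version range; all component names are invented, and the version parser handles only dotted integers.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Component:
    name: str
    version: str
    kind: str  # e.g. "framework", "model", "dataset"

def parse_version(version: str) -> tuple[int, ...]:
    """Parse a dotted numeric version such as "2.1.0" into a comparable tuple."""
    return tuple(int(part) for part in version.split("."))

def affected_components(bom, package, introduced, fixed):
    """Components of the given package with introduced <= version < fixed."""
    low, high = parse_version(introduced), parse_version(fixed)
    return [c for c in bom
            if c.name == package and low <= parse_version(c.version) < high]

# Hypothetical inventory; every name and version here is invented.
bom = [
    Component("example-ml-framework", "2.1.0", "framework"),
    Component("example-tokenizer", "0.9.3", "library"),
    Component("sentiment-model", "1.4.0", "model"),
    Component("customer-reviews", "2024.1", "dataset"),
]

# Hypothetical advisory: example-ml-framework from 2.0.0 up to (not including) 2.2.0.
hits = affected_components(bom, "example-ml-framework", "2.0.0", "2.2.0")
for c in hits:
    print(f"patch needed: {c.name} {c.version} ({c.kind})")
```

Without the inventory, answering the same question means auditing every deployed system by hand each time an advisory appears.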

Adopting rigorous testing and benchmarking is another essential strategy for enhancing AI system security.

“Before integrating external data and AI models into your applications, it is crucial to assess their security and integrity,” Marchal explains.

“Establishing quantifiable metrics – including security, resilience, privacy preservation and fairness – provides a clear framework for evaluation. This approach not only ensures that the components meet stringent standards before deployment but also makes comparing different solutions easier.”
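The sketch below shows what such quantifiable acceptance criteria might look like for two candidate models. It assumes three illustrative metrics: accuracy on clean test data, accuracy on perturbed inputs as a rough proxy for resilience, and the gap in positive-prediction rates between two groups (demographic parity difference) as one possible fairness measure. The data and thresholds are hypothetical, and privacy metrics are left out for brevity.

```python
def accuracy(preds, labels):
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)

def parity_gap(preds, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = []
    for g in set(groups):
        member_preds = [p for p, grp in zip(preds, groups) if grp == g]
        rates.append(sum(member_preds) / len(member_preds))
    return max(rates) - min(rates)

# Hypothetical acceptance criteria: (metric, comparison, threshold).
CRITERIA = [("accuracy", ">=", 0.90), ("robust_accuracy", ">=", 0.80), ("parity_gap", "<=", 0.10)]

def failed_criteria(clean_preds, perturbed_preds, labels, groups):
    """Compute each metric and return the names of the criteria it fails."""
    metrics = {
        "accuracy": accuracy(clean_preds, labels),
        "robust_accuracy": accuracy(perturbed_preds, labels),
        "parity_gap": parity_gap(clean_preds, groups),
    }
    failures = []
    for name, op, threshold in CRITERIA:
        value = metrics[name]
        ok = value >= threshold if op == ">=" else value <= threshold
        if not ok:
            failures.append(name)
    return failures

labels = [1, 0, 1, 0, 1, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
# Hypothetical predictions: (on clean inputs, on perturbed inputs).
candidates = {
    "model_a": ([1, 0, 1, 0, 1, 0, 1, 0], [1, 0, 1, 0, 1, 0, 0, 0]),
    "model_b": ([1, 1, 1, 0, 1, 0, 0, 0], [1, 1, 1, 1, 0, 0, 0, 0]),
}
report = {name: failed_criteria(clean, perturbed, labels, groups)
          for name, (clean, perturbed) in candidates.items()}
print(report)  # {'model_a': [], 'model_b': ['accuracy', 'robust_accuracy', 'parity_gap']}
```

Keeping the criteria as data means every candidate is judged by the same gate, which also makes side-by-side comparison straightforward.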

VTT offers valuable expertise for organisations looking to enhance the security and reliability of their AI systems.

“We develop comprehensive testing solutions and protocols tailored to evaluate the security and integrity of AI models”, says Marchal.

“Our team has successfully contributed to numerous projects, giving us extensive experience in AI security management. By leveraging our background in security and compliance testing for traditional systems, we apply these insights to strengthen AI systems, ensuring they meet the highest standards of performance and safety.”