Autonomously moving drones and other robots must observe their environment and interpret their observations without interruption. Using current technologies, this requires more energy than the batteries of a lightweight device can provide. That is why VTT and its partners are developing a fast, safe and energy-efficient machine vision system inspired by the human vision system.

A machine vision system that observes the visible environment and interprets what it observes is a prerequisite for autonomous devices. To ensure speed and safety, such systems must process data locally. In self-driving cars, this can be achieved with LiDARs and on-board computers, but in lighter devices, such as self-flying drones, the batteries cannot supply enough power for ordinary computer hardware.

The EU-funded MISEL (Multispectral Intelligent Vision System with Embedded Low-Power Neural Computing) project, coordinated by VTT, aims to develop a fast, reliable and energy-efficient machine vision system that could be used in devices such as drones, industrial and service robots and surveillance systems.

“In addition to algorithms, we are developing devices better suited for local perception and analysis than computers that use microprocessors and graphics cards. We are modelling it after the human vision system that works locally, rapidly and energy efficiently. It is essential that the system filters observations to be processed right from the start rather than taking a series of regular photos and going through all data they contain. Filtering is possible when the system focuses on events, i.e., on the changes in the scenes,” says Jacek Flak, Senior Scientist at VTT, who coordinates the MISEL project.
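The event-based filtering described in the quote can be illustrated with a minimal sketch. It is not the MISEL design: it approximates the idea by comparing two ordinary frames in software, whereas event-based sensors typically detect changes per pixel in hardware. The names `detect_events`, `Frame`, `Event` and the `threshold` value are assumptions made for this illustration only.

```python
from typing import List, Tuple

Frame = List[List[int]]       # grayscale intensities, one inner list per row
Event = Tuple[int, int, int]  # (row, col, polarity): +1 brighter, -1 darker


def detect_events(prev: Frame, curr: Frame, threshold: int = 15) -> List[Event]:
    """Return one event per pixel whose intensity changed by more than `threshold`.

    Hypothetical helper: unchanged pixels produce no output at all, which is
    the filtering effect the article describes.
    """
    events: List[Event] = []
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (old, new) in enumerate(zip(prev_row, curr_row)):
            diff = new - old
            if abs(diff) > threshold:
                events.append((r, c, 1 if diff > 0 else -1))
    return events


# A static 3x3 scene in which one pixel brightens and one darkens.
prev_frame = [[40, 40, 40], [40, 40, 40], [40, 40, 40]]
curr_frame = [[40, 40, 40], [40, 120, 40], [40, 40, 5]]

events = detect_events(prev_frame, curr_frame)
print(events)  # [(1, 1, 1), (2, 2, -1)]
```

In this toy scene only two of the nine pixels generate data, so a downstream processor has far less to analyse than it would with the full frame.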

New sensor technology combined with machine learning

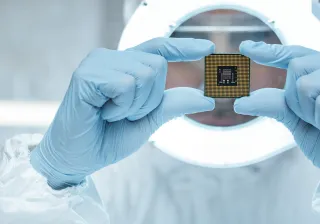

The MISEL project is developing a neuromorphic machine vision system, that is, one that mimics the functioning of the human brain. The system consists of three components. The first is a photodetector built with quantum dots that are sensitive to both visible and infrared light. Infrared sensitivity will also allow the system to operate in fog, rain or darkness.

Like the retina of the eye, the sensor selects and compresses data before forwarding it. The second component in the chain mimics the cerebellum, located at the back of the head, and can, for instance, give instructions for a rapid manoeuvre (a reflex). The third component in the chain is a processor imitating the cortex. It analyses the data in more depth and directs the sensor to focus on objects of interest. The project aims to use machine learning methods to teach the system to identify different events, such as distinguishing a flying drone from a bird, and to anticipate chains of events.
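The division of labour between the fast reflex stage and the slower analysis stage can be sketched as follows. This is a toy illustration under stated assumptions, not the project's architecture: the functions `reflex`, `region_of_interest` and `process`, the `burst_size` parameter and the `"evade"` command are all hypothetical, and the real components are dedicated hardware rather than Python functions.

```python
from typing import List, Optional, Tuple

Event = Tuple[int, int]        # (row, col) of a pixel that changed
Box = Tuple[int, int, int, int]  # (top, left, bottom, right)


def reflex(events: List[Event], burst_size: int = 4) -> Optional[str]:
    """Fast path (cerebellum-like): react to a sudden burst of events
    without any deeper analysis. Hypothetical rule for illustration."""
    return "evade" if len(events) >= burst_size else None


def region_of_interest(events: List[Event]) -> Optional[Box]:
    """Slow path (cortex-like): bounding box the sensor should focus on next."""
    if not events:
        return None
    rows = [r for r, _ in events]
    cols = [c for _, c in events]
    return (min(rows), min(cols), max(rows), max(cols))


def process(events: List[Event]) -> Tuple[Optional[str], Optional[Box]]:
    """Run both paths on the events forwarded by the (retina-like) sensor."""
    return reflex(events), region_of_interest(events)


quiet = [(2, 3)]
burst = [(1, 1), (1, 2), (2, 1), (2, 2), (3, 2)]

print(process(quiet))  # (None, (2, 3, 2, 3))
print(process(burst))  # ('evade', (1, 1, 3, 2))
```

The point of the split is that the reflex decision depends only on a cheap check, so it can be taken immediately, while the region of interest is fed back to steer what the sensor looks at next.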

In addition to coordination, VTT’s role in the project is to focus on developing a processor mimicking the functioning of the cerebral cortex and a novel non-volatile memory. Furthermore, VTT is responsible for the compatibility of the different technologies and the integration of the different components with the processors.

In addition to VTT, the four-year MISEL project, launched at the beginning of 2021, involves the following eight partners: AMO GmbH (Germany), the University of Wuppertal (Germany), the Fraunhofer Centre for Applied Nanotechnology (Germany), Kovilta Oy (Finland), the University of Santiago de Compostela (Spain), Lodz University of Technology (Poland), Laboratoire national de métrologie et d'Essais (France) and Lund University (Sweden). The EU's Horizon 2020 programme funds the project with EUR 4.96 million.