What counts as artificial intelligence is a matter of trends, says VTT’s Research Professor Heikki Ailisto. When AI is fashionable, everyone investigates it – at other times we talk about robotics, data analytics or machine vision, for example. VTT has been advancing these themes since the 1980s. Over that time, the focus has shifted from symbolic knowledge to data-based AI and from toy problems to real needs.

AI development began in the 1950s, but interest in it grew considerably stronger in the 1980s. Heikki Ailisto started working with AI around that time, first as a machine vision researcher at the University of Oulu. In 1986, he moved to VTT.

”VTT was at the forefront of AI research: we had four AI teams with slightly different focuses. Machine vision was one of our themes, and I worked on it until the end of the 1990s. Later, in the 2010s, I returned to AI applications”, says Ailisto.

Hype and silence take turns in AI debate

During Ailisto's career, AI was first on everyone's lips at the turn of the 1980s and 1990s. At that time, the enthusiasm centred on expert systems. These were rule-based and aimed to solve practical problems: they could, for example, recommend a suitable model to someone buying a camera.

”These were toy problems: questions that resembled real problems but were simplified so that even a rudimentary algorithm could solve them. Another hot topic of the time was the LISP language, which was often run on dedicated Symbolics computers built for this purpose. Enthusiasm for both soon faded”, says Ailisto.

The short-lived hype was followed by a quiet period of about ten years in the 1990s, when AI was not much of a topic. Even then, VTT researched pattern recognition, data analytics and machine learning, for example. In the early 2000s, research topics were agent systems and ontology structures, i.e., knowledge bases.

”Agent systems consisted of partly autonomous entities implemented as computer programs. These agents could receive information, analyze it, make decisions and communicate with other agents. Research on ontology structures, on the other hand, aimed to build structured knowledge bases and related rules that could be used to describe, for example, the operation of a process or a machine.”

Deep neural networks revolutionized machine learning

In the early 2010s, machine-learning-based AI made a breakthrough. This advance was rooted in neural networks, which mimic the brain's activity in a very simple way. Mathematicians – among them Seppo Linnainmaa, who also worked at VTT – had developed the theory of neural networks and their learning methods in the 1960s and 1970s. By the 2010s, the time was ripe for much larger neural networks.

”Many factors enabled this development. Huge amounts of data were available, computing capacity had increased, and graphics processors made for games turned out to be well suited to the calculations that neural networks require. In 2012, a trio of researchers in Canada brought deep neural networks into the spotlight”, explains Ailisto.

Images, for example, could be fed into a deep neural network, and with abundant training data, machine vision soon learned to recognize faces even more reliably than the average person. By 2015, methods based on deep neural networks had surpassed all previous image recognition methods.

”This was an important milestone. The new methods also made it possible to predict other phenomena. Many physical phenomena are so complex, difficult or imprecise that they cannot be modelled with accurate physics models. With neural networks and a data-driven approach, these phenomena can be modelled without fully understanding them. This approach was first applied in areas such as targeted advertising and risk forecasting in the insurance industry.”

The AI debate is accelerating – attitudes vary around the world

The conversation about AI has intensified over the last five years, and expectations have grown with it. AI has been predicted to bring economic growth, increase labor productivity and revolutionize the labor market. According to Ailisto, the effects have so far been smaller than expected: significant, but not revolutionary.

In 2017, Ailisto studied AI strategies in different countries. ”In Russia, it’s considered that whoever controls AI controls the world. In North America, the topic is seen from a business perspective. Many smaller countries want to be at the forefront of development and achieve a competitive advantage – Finland is one of them.”

For a country of its size, Finland has strong AI research and education. Finnish listed companies use AI in their operations, and new AI-based companies have also emerged.

”However, the technological base comes from elsewhere: we don’t have companies like Google or Amazon that develop AI technology. Most of our companies are on a par with other European companies, but behind those in East Asia and North America”, Ailisto points out.

In Finland, the public sector is also cautious about using AI. In the reform of social welfare and healthcare, for example, AI could have helped solve digitalization problems, but so far its use has remained minimal. The GDPR and privacy protection complicate the application of AI and, to some extent, prevent it; we prefer to play it safe when implementing solutions.

Future development in different countries is influenced by both regulation and attitudes. ”Regulation is stricter in Europe than in North America, and especially China. Attitudes, on the other hand, are most positive in East Asia and most negative in Africa and South America, with North America and Europe in the middle.”

Development also depends on funding. ”Investments in AI increased until 2021, but there was a slight drop in 2022. Investors want to see results and find new targets. We'll see if funding increases again with the rise of generative AI.”

Will generative AI deliver on its promises?

During his career, Ailisto has seen several important AI boundaries being broken. One example is the Turing test, which measures how human-like an AI is. ”In the test, a person converses with a counterpart and is observed to see whether they can tell if they are talking to a human or a machine. According to several experts, AI now passes the test.”

AI has brought a vast number of new tools to research, and they are widely used at VTT. ”I conducted a survey on this a few years ago. Even then, around 100 of our 2,000 researchers used AI methods regularly.”

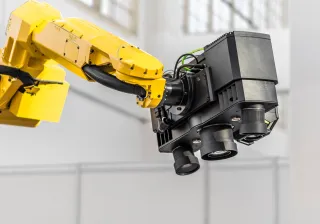

There is a broad field of applications for AI in research: it can be used for modelling molecular structures in materials science, for process development in industry, or in healthcare, for example. Traditional applications for tasks like production and process control include analysis and pattern recognition of signals produced by sensors, optical meters and cameras, as well as robotics and autonomous vehicles. All these areas have been studied at VTT for a long time.

The latest leap in AI development came a year ago, when generative AI and large language models broke through to the general public. Many were surprised by how well they produce text of average quality. Once again, a big breakthrough is expected.

”It is still uncertain how useful generative AI will prove to be. One scenario is that the use of AI becomes commonplace. If the methods are available to everyone at low cost, this could lead to some degree of democratization of AI use”, states Ailisto.